BluelightAI Research Fellowship

At BluelightAI we believe that understanding how AI models work will be a key factor in ensuring that these models benefit humanity. We’re using topological data analysis and mechanistic interpretability to get insights into models’ internal functioning, and building tools to leverage those insights in real-world scenarios. Some things we’ve been working on recently include training cross-layer transcoders for Qwen 3, using CLT/SAE features to train interpretable classifiers, and using SAE features to investigate patterns in model performance.

We’re excited to open a number of research fellowship positions for students, postdocs, or others who are interested in getting deeper into mechinterp and TDA. These will be high-velocity collaborations with BluelightAI team members to make discoveries about how AI models work. These will be remote collaborations—applicants from all around the world will be accepted. If you have experience with TDA, LLM training, or mechanistic interpretability, we’d love to work with you!

Circuit tracing with the Qwen3 Cross-layer Transcoders

A circuit-level analysis of how Qwen3 produces specific predictions.

Cross-layer transcoders (CLTs) were originally developed to help find circuits in large language models. Circuits are collections of components in the model through which we can causally trace the model’s logic as it produces an output, explaining why the model produces the output it does for a given input. For a circuit-based explanation of a model to work well, it should do a few things.

The circuit should be made up of pieces we understand. In our case, these pieces will be groups of CLT features.

The circuit should tell a story. We should be able to identify different steps in the model’s computational process and why those steps make sense to do. For a particular prompt, we will look for computational connections between these groups of features.

Finally, the circuit should let us intervene in the model’s computation with predictable effects. To test this, we will steer groups of features and observe the effect on the model’s prediction.

Cross Layer Transcoders for the Qwen3 LLM Family

Digging Into Interpretable Features

This post was originally published on LessWrong.

Sparse autoencoders (SAEs) and cross-layer transcoders (CLTs) have recently been used to decode the activation vectors in large language models into more interpretable features. Analyses have been performed by Goodfire, Anthropic, DeepMind, and OpenAI.

BluelightAI has constructed CLT features for the Qwen3 family, specifically Qwen3-0.6B Base and Qwen3-1.7B Base, which are made available for exploration and discovery here. In addition to the construction of the features themselves, we enable the use of topological data analysis (TDA) methods for improved interaction and analysis of the constructed features.

Introducing Cross-Layer Transcoders for Qwen3

A New Step Toward Understanding How Qwen3 Represents and Transforms Information

Today, BluelightAI is releasing the first-ever Cross-Layer Transcoders (“CLTs”) for the Qwen3 family of models, beginning with Qwen3-0.6B and Qwen3-1.7B. These CLTs make it possible to examine how Qwen3 encodes concepts, propagates information, and composes meaning across its layers.

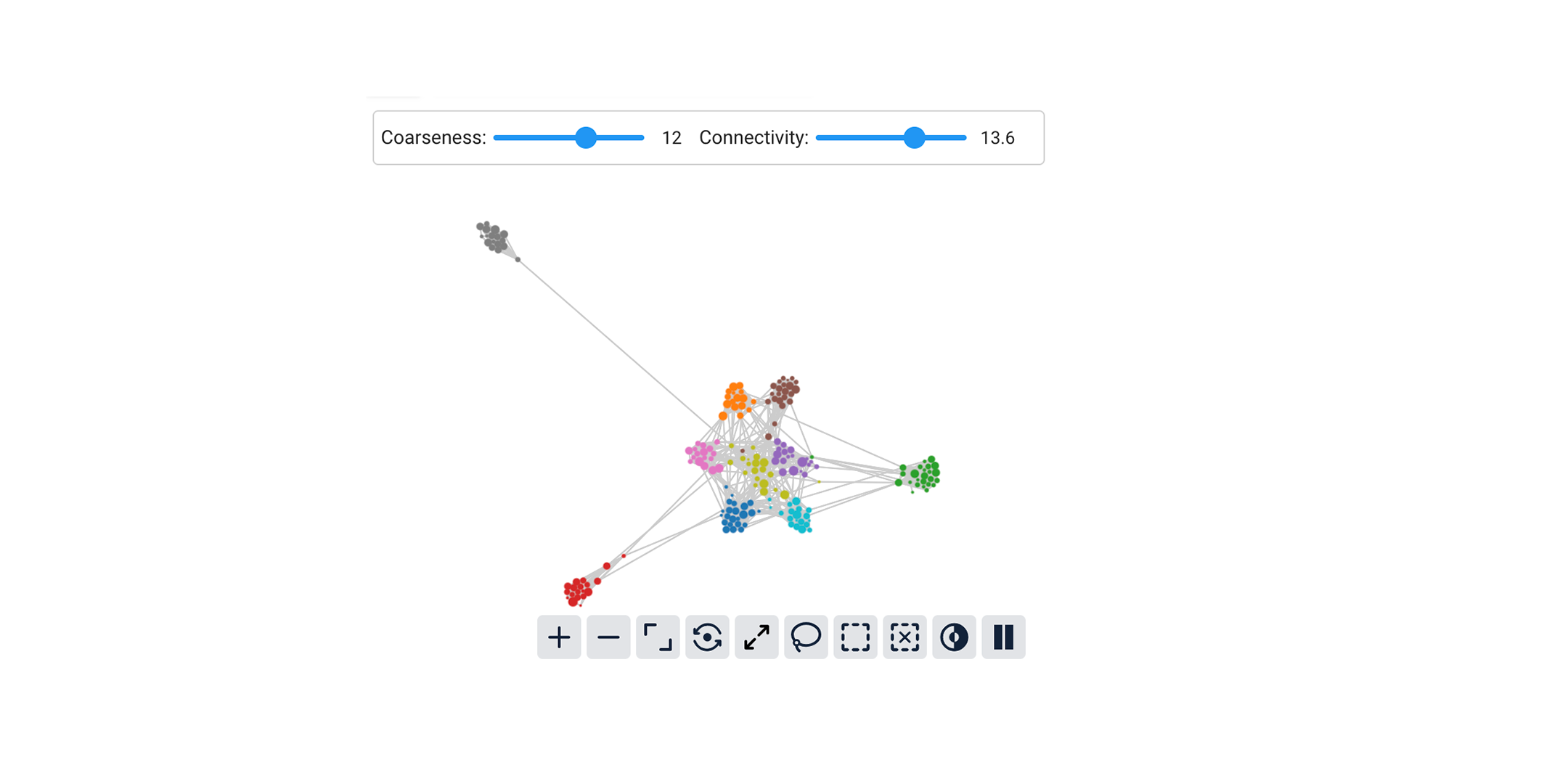

Alongside the CLT release, we are launching a dashboard to explore the features discovered. The Qwen3 Explorer provides an interactive environment for studying learned features, tracing activation flows, and visualizing the model through Cobalt’s topological data analysis.

Together, these components make Qwen3 one of the most interpretable open-source model families available.

From Black Box to Audit Trail: How BluelightAI Makes LLM Decisions Explainable

Enterprise teams are excited about what large language models can do. They can read messy text, spot patterns, and generate fluent answers in seconds.

But for high stakes decisions, one critical question remains unanswered:

Why did the model decide that?

If you are approving or denying claims, flagging fraud, or making risk decisions, a fluent answer is not enough. You need a clear, defensible explanation that a human can understand and an auditor can follow.

BluelightAI exists to provide exactly that.

The Regulatory Horizon for AI Companies: What to Know and How to Prepare

Why it matters

AI is moving from a “nice-to-have” technology into a highly regulated domain. For companies building, deploying, or integrating AI systems, regulatory risk is real: non-compliance can mean substantial fines, reputational damage, increased liability, and market exclusion. At the same time, well-designed governance of AI systems can become a differentiator: trusted, transparent systems earn customer and partner confidence.

Below is a breakdown of the current regulatory regime (global but with emphasis on the EU and U.S.), the exact wording of key provisions you should watch, and compliance steps for AI companies. At the end, you’ll see how BluelightAI’s Cobalt helps embed transparency, auditability, and decision-understanding into your AI stack — helping you reduce regulatory risk and build trust.

Signal Processing for AI

Solving Model Errors at the Source

Signal processing is the extraction, from raw data, of information that a mathematical model can use. It is a critical element in many engineering domains, including imaging, audio, speech, and radar. It covers filtering tasks that remove noise from images or audio, Fourier transform techniques for audio, and more complex tasks such as locating and reconstructing objects using radar or sonar.
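As a small illustration of the noise-removal filtering mentioned above, here is a minimal sketch of a moving-average filter applied to a noisy sine wave. The signal, noise level, and window size are all made-up values chosen for the example, and the filter is a generic textbook technique rather than anything specific to the post.

```python
import math
import random


def moving_average(signal, window=5):
    """Smooth a sequence by averaging each sample with its neighbours."""
    half = window // 2
    smoothed = []
    for i in range(len(signal)):
        lo = max(0, i - half)
        hi = min(len(signal), i + half + 1)
        # Near the edges the window is truncated, so divide by its actual size.
        smoothed.append(sum(signal[lo:hi]) / (hi - lo))
    return smoothed


def mean_squared_error(a, b):
    """Average squared difference between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


rng = random.Random(0)
# Assumed test signal: a slow sine wave plus Gaussian noise.
clean = [math.sin(2 * math.pi * i / 100) for i in range(400)]
noisy = [c + rng.gauss(0, 0.3) for c in clean]
denoised = moving_average(noisy, window=5)

noisy_error = mean_squared_error(noisy, clean)
denoised_error = mean_squared_error(denoised, clean)
print(f"error before filtering: {noisy_error:.4f}")
print(f"error after filtering:  {denoised_error:.4f}")
```

Averaging over a short window suppresses the independent noise while barely changing the slowly varying signal, which is why the error against the clean signal drops.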

Graph Modeling for Mechanistic Interpretability

Turning Complex AI Models into Searchable Graphs

The problem of extracting human-understandable information from large, complex, and noisy text or image data is one of the fundamental challenges facing artificial intelligence. Modern AI models (especially LLMs) can deliver amazing outputs, but their decision-making processes often remain hidden, creating a “black box” that makes it difficult to know why models fail or succeed.

BluelightAI’s flagship interpretability platform, Cobalt, directly addresses this AI black box problem by leveraging Topological Data Analysis (“TDA”) as a foundational technology, a critical differentiator unmatched by existing evaluation platforms. The fundamental idea behind TDA is that for many kinds of data, traditional algebraic tools are not flexible enough to represent data as effectively as we would like.

Topological Feature Generation for Speech Recognition

How smart feature engineering improves speech recognition

In our earlier blog post we showed that topological techniques can be used to improve the performance of convolutional neural networks used for image classification. Specifically, we used features parametrized by a geometric object called a Klein bottle, as well as an architecture guided by the same object, to drastically speed up learning and, more importantly, to improve generalization. We believe that generalization is a good measure of progress toward Artificial General Intelligence.

Using TDA and Sparse Autoencoders to Evaluate TruthfulQA

When we used Cobalt to analyze an LLM’s performance on the TruthfulQA dataset, we discovered five distinct failure groups of questions that the model underperformed on.

We characterized them as follows:

fg/1 (questions confusing two well-known figures with the same first name)

fg/2 (questions about geography)

fg/3 (questions about laws and practices in different countries)

fg/4 (pseudoscience and commonly misattributed quotes)

fg/5 (questions that try to confuse fact with opinion)

In this post, we’ll use some more advanced tools from topological data analysis and mechanistic interpretability to go even deeper into the model’s performance and the TruthfulQA dataset. Specifically, we’ll use a set of sparse autoencoders trained on the Gemma 2 model to better understand what the model sees when it looks at the TruthfulQA questions.

Feature Engineering for Language Models

Using parts of speech to improve language model performance

V. Lado Naess, L. Sjögren, D. Fooshee, G. Carlsson

In our earlier post “Improving CNNs with Klein Networks: A Topological Approach to AI,” we observed that adding predefined interpretable features to a CNN, and even modifying its architecture, resulted in significant improvement in its performance. Speed of learning was of course greatly improved, but it turned out that generalization was also greatly improved. Moreover, the insights thus obtained allowed us to construct new features for video that greatly improved performance on a video classification task. These observations led us to ask if the addition of predefined interpretable features to Large Language Models could also lead to improvements in their performance. In this post we report findings from experiments we performed on a language model by adjoining features constructed from parts of speech tagging applied to the input data.

Why Your AI Needs Cobalt: Adapt, Diagnose, and Deploy with Confidence

Adapt AI to Your Use Case

The Challenge: Adapting State-of-the-Art Models to Industry-Specific Use Cases is Hard

Transforming a general-purpose model into an AI specialist for your industry is an ongoing process that continues throughout the lifecycle of the model. AI apps and agents are challenging to adapt because the interactions that occur are varied and change constantly over time. Consequently, enterprises are behind the curve in deploying LLM-based specialists and agents because they are not confident in the predictability and reliability of these systems, which can produce incorrect responses and hallucinations.

Our Solution: Cobalt – Mechanistic Interpretability for Model Evaluation

BluelightAI is a platform that helps users evaluate, adapt, and improve state of the art models for their use case. This platform provides a hub for comparing datasets, evaluation metrics, and models (open and proprietary) with specific, actionable insights into their capabilities and blind spots.

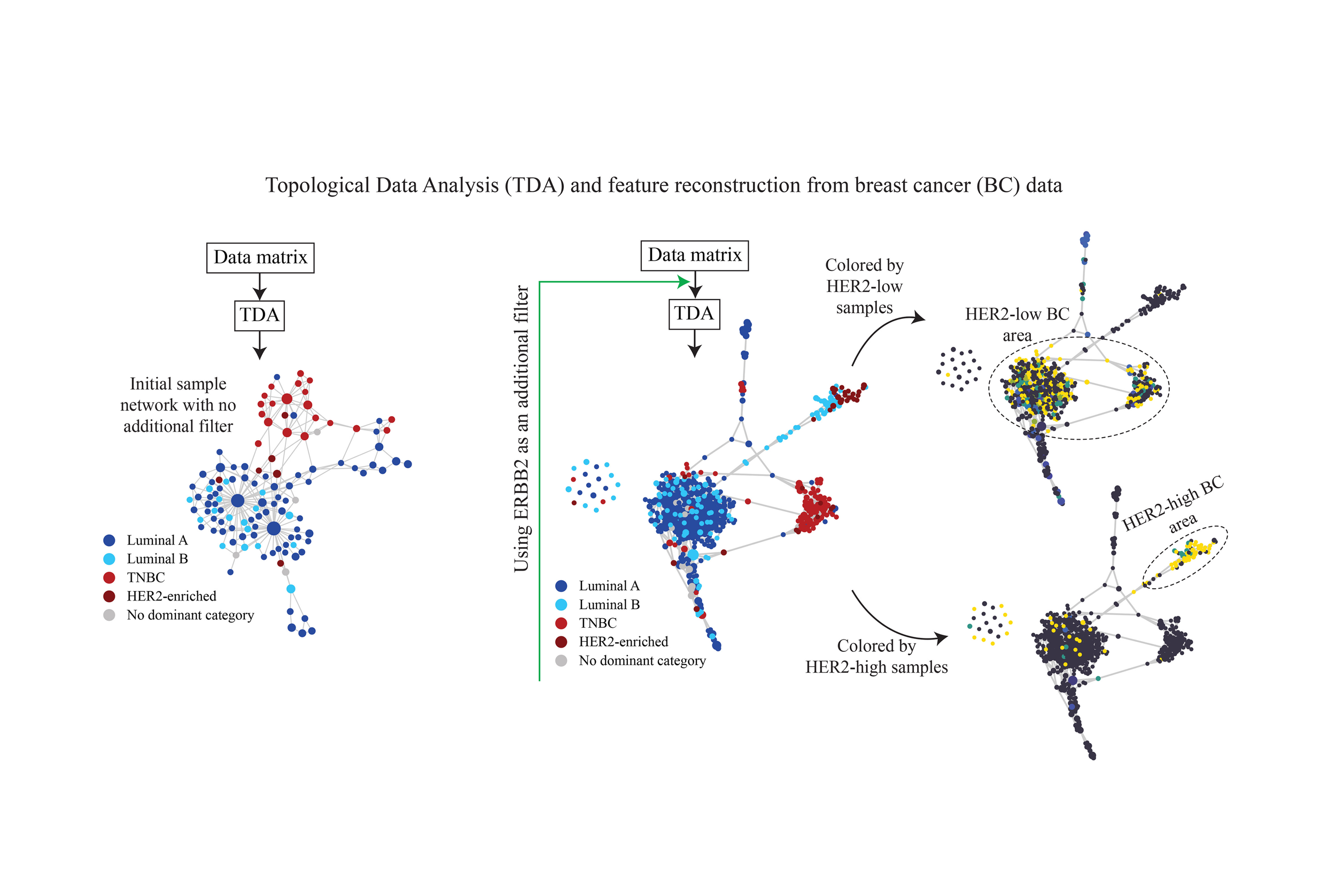

Mechanistic Interpretability in Practice: Applying TDA to Breast Cancer

This paper, which we shared last week, is a demonstration of how topological data analysis methods can be used for feature engineering and selection for data sets where there are many features, or columns in the data matrix.

In our LessWrong post we pointed out that when a data set is “wide”, i.e. includes a large number of features, it is useful to compress the feature set into a graph structure where each node corresponds to a set of features. This gives an overview of a feature set that might otherwise be very hard to understand. Each data point can also be treated as a function on the nodes of the corresponding graph, so that one can examine and compare data points or collections of data points using “graph heat maps”, i.e. colorings of the nodes representing the functional values.

Mechanistic interpretability is the unifying concept here: both the paper and the post use feature compression and graph-based visualization to turn high-dimensional, opaque systems into interpretable structures that reveal underlying relationships. This paper studies a particular wide data set, where the features correspond to genes, with the entries being gene expression levels.
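The graph heat map idea described above can be sketched in a few lines. This is only an illustration under stated assumptions: the node names, gene names, and expression values are hypothetical, the grouping of features into nodes is given by hand rather than computed by TDA, and averaging the features within a node is one plausible choice for the function on nodes, not necessarily the one used in the paper.

```python
from statistics import fmean

# Hypothetical feature graph: each node is a set of related feature (column) names.
feature_nodes = {
    "node_0": ["gene_a", "gene_b", "gene_c"],
    "node_1": ["gene_d", "gene_e"],
    "node_2": ["gene_f"],
}


def node_values(sample, nodes):
    """Treat one data point as a function on the nodes (assumed: mean over the node's features)."""
    return {name: fmean([sample[f] for f in features]) for name, features in nodes.items()}


def group_heat_map(samples, nodes):
    """Average the per-sample node values over a collection of data points."""
    per_sample = [node_values(s, nodes) for s in samples]
    return {name: fmean([values[name] for values in per_sample]) for name in nodes}


def heat_map_difference(group_a, group_b, nodes):
    """Node-by-node difference between two groups' heat maps."""
    a = group_heat_map(group_a, nodes)
    b = group_heat_map(group_b, nodes)
    return {name: a[name] - b[name] for name in nodes}


# Made-up expression levels, for illustration only.
sample_1 = {"gene_a": 2.0, "gene_b": 4.0, "gene_c": 6.0, "gene_d": 1.0, "gene_e": 3.0, "gene_f": 5.0}
sample_2 = {"gene_a": 0.0, "gene_b": 0.0, "gene_c": 0.0, "gene_d": 1.0, "gene_e": 3.0, "gene_f": 9.0}

heat_1 = node_values(sample_1, feature_nodes)
difference = heat_map_difference([sample_1], [sample_2], feature_nodes)
print(heat_1)
print(difference)
```

Coloring each node by its value in `heat_1` gives the heat map for a single data point, while `difference` highlights the nodes on which two groups of data points disagree.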

Topological Data Analysis Reveals a Subgroup of Luminal B Breast Cancer

This paper was originally published in the IEEE Open Journal of Engineering in Medicine and Biology.

Impact Statement:

High dimensional data is difficult to visualize or analyze. Reducing the dimension of data while preserving its veracity has become a key challenge for data science. A key tenet of data is its shape that can be topologically defined. In this study, we use the shape or topology of data to infer features that potentially carry valuable information. We use BC data as an example owing to its importance and complexity. The heterogeneity of BC data makes interpretation and prognosis difficult. We show that topological analysis of BC data and its graphical representation provide a natural subtyping in addition to parsing the associated features. Interestingly, the topological analysis points to a novel HER-2 positive luminal subtype, suggesting alternate therapeutic interventions.

Next Generation AI Model Evaluation

Go beyond the leaderboard: How TDA uncovers what benchmark scores miss in model evaluation.

The evaluation of models is absolutely critical to the artificial intelligence enterprise. Without an array of evaluation methods, we will not be able to understand whether the models are doing what we want them to do, or what measures we should take to improve them. Another reason for the need for good evaluation measures is that once an AI model is deployed, we will find that the input data, the interaction of users with the model, and the user reactions to the output of the model will change over time. This means that not only do we need evaluation at the time of construction of the model, we will need to evaluate continually throughout the deployment lifecycle of the model.

New Release: Cobalt Version 0.3.9

Cobalt Version 0.3.9 is now available. You can install the latest version by running pip install --upgrade cobalt-ai

Features:

Group comparison in the UI now supports a choice of different statistical tests for numerical features.

In addition to the t-test, the Kolmogorov-Smirnov test and the Wilcoxon rank-sum test are supported, as well as a version of the t-test that uses permutation sampling to approximate the p-value instead of the t-distribution.

Workspace.get_group_neighbors() is a new method that finds a group of nearby neighbors of a given CobaltDataSubset. This neighborhood group can also be used as Group B in the group comparison UI.

The graph layout algorithm has been substantially improved and now presents cleaner, easier-to-read graphs. Some configuration options are available in cobalt.settings.
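To make the permutation-based t-test from the release notes above more concrete, here is a minimal standard-library sketch of the general technique. It is not Cobalt's implementation: the function names, the choice of Welch's t-statistic, the number of permutations, and the sample data are all assumptions made for illustration.

```python
import random
import statistics


def welch_t(a, b):
    """Welch's t-statistic for two samples of numbers."""
    var_a = statistics.variance(a) / len(a)
    var_b = statistics.variance(b) / len(b)
    return (statistics.fmean(a) - statistics.fmean(b)) / (var_a + var_b) ** 0.5


def permutation_t_test(a, b, n_permutations=2000, seed=0):
    """Two-sided p-value estimated by shuffling group labels instead of using the t-distribution."""
    rng = random.Random(seed)
    observed = abs(welch_t(a, b))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        permuted = abs(welch_t(pooled[:len(a)], pooled[len(a):]))
        if permuted >= observed:
            hits += 1
    # Add-one correction keeps the estimate strictly positive.
    return (hits + 1) / (n_permutations + 1)


# Made-up numerical feature values for two groups.
rng = random.Random(1)
group_a = [rng.gauss(0.0, 1.0) for _ in range(40)]
group_b = [rng.gauss(1.5, 1.0) for _ in range(40)]

p_shifted = permutation_t_test(group_a, group_b)
p_same = permutation_t_test(group_a, group_a[::-1])
print(f"shifted groups: p = {p_shifted:.4f}")
print(f"identical values: p = {p_same:.4f}")
```

The p-value is the fraction of label shufflings whose statistic is at least as extreme as the observed one, so it makes no distributional assumption about the feature being compared.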

Improving CNNs with Klein Networks: A Topological Approach to AI

This article was originally published in LessWrong.

In our earlier post, we described how one could parametrize local image patches in natural images by a surface called a Klein bottle. In Love et al., we used this information to modify the convolutional neural network construction so as to incorporate information about the pixels in a small neighborhood of a given pixel in a systematic way. We found that we were able to improve performance in various ways. One obvious way is that the neural networks learned more quickly, and we therefore believe that they could learn on less data. Another very important point, though, was that the new networks were also able to generalize better. We carried out a synthetic experiment in which we introduced noise into MNIST. We then performed two experiments, one in which we trained on the original MNIST and evaluated the convolutional models on the “noisy” set, and another in which we trained on the noisy set and evaluated on the original set. The results are displayed below.

Evaluating LLM Hallucinations with BluelightAI Cobalt

Large language models are powerful tools for flexibly solving a wide variety of problems. But it’s surprisingly common for them to produce outputs that are untethered to reality. This phenomenon of hallucination is a major limiting factor for deploying LLMs in sensitive applications. If you want to deploy an LLM-based system in production, it’s important to understand the types of mistakes it may make, and use this knowledge to make decisions about what model to deploy and how to mitigate risks.

In this post, we’ll see how BluelightAI Cobalt can help understand a model’s tendency to hallucinate. In particular, we’ll identify certain types of inputs or questions where a model is more prone to make errors. Follow along in the Colab notebook!

From Loops to Klein Bottles: Uncovering Hidden Topology in High Dimensional Data

Motivation: Dimensionality reduction is vital to the analysis of high dimensional data. It allows for better understanding of the data, so that one can formulate useful analyses. Dimensionality reduction produces a set of points in a vector space of dimension n, where n is much smaller than the number of features N in the data set. If the number n is 1, 2, or 3, it is possible to visualize the data and obtain insights. If n is larger, then it is more difficult. One interesting situation, though, is where the data concentrates around a non-linear surface whose dimension is 1, 2, or 3, but can only be embedded in a dimension higher than 3. We will discuss such examples in this post.
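The simplest instance of this gap between intrinsic and embedding dimension is a loop: it is described by a single angle, yet no single linear coordinate represents it. The sketch below places a circle in a random plane of a 50-dimensional space and measures how much variance linear projections capture. The point counts, dimensions, and helper names are assumptions chosen for the example, and the circle only illustrates the principle at a lower dimension than the surfaces discussed in the post.

```python
import math
import random


def unit(v):
    """Scale a vector to length 1."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def loop_in_high_dim(n_points=200, n_features=50, seed=0):
    """Points on a circle placed in a random 2-D plane of an N-dimensional space."""
    rng = random.Random(seed)
    u = unit([rng.gauss(0, 1) for _ in range(n_features)])
    raw = [rng.gauss(0, 1) for _ in range(n_features)]
    proj = dot(raw, u)
    v = unit([r - proj * a for r, a in zip(raw, u)])  # Gram-Schmidt step
    points = []
    for k in range(n_points):
        t = 2 * math.pi * k / n_points
        points.append([math.cos(t) * a + math.sin(t) * b for a, b in zip(u, v)])
    return points, u, v


def variance_along(points, direction):
    """Variance of the data after projecting onto one unit direction."""
    coords = [dot(p, direction) for p in points]
    mean = sum(coords) / len(coords)
    return sum((c - mean) ** 2 for c in coords) / len(coords)


def total_variance(points):
    """Mean squared distance of the points from their centroid."""
    n = len(points)
    centroid = [sum(p[i] for p in points) / n for i in range(len(points[0]))]
    return sum(sum((x - c) ** 2 for x, c in zip(p, centroid)) for p in points) / n


points, u, v = loop_in_high_dim()
total = total_variance(points)

# Sweep unit directions inside the loop's plane; directions leaving the plane capture less.
best_single = max(
    variance_along(points, [math.cos(phi) * a + math.sin(phi) * b for a, b in zip(u, v)]) / total
    for phi in [math.pi * k / 180 for k in range(180)]
)
two_axes = (variance_along(points, u) + variance_along(points, v)) / total
print(f"best single linear coordinate: {best_single:.3f} of the variance")
print(f"two linear coordinates:        {two_axes:.3f} of the variance")
```

Every single direction captures only half of the variance, while two directions capture all of it, even though one angle is enough to describe each point on the loop.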

How to Use BluelightAI Cobalt with Tabular Data

BluelightAI Cobalt is built to quickly give you deep insights into complex data. You may have seen examples where Cobalt quickly reveals something hidden in text or image data, leveraging the power of neural embedding models. But what about tabular data, the often-underappreciated workhorse of machine learning and data science tasks? Can Cobalt bring the power of TDA to understanding structured tabular datasets?

Yes! Using tabular data in Cobalt is easy and straightforward. We’ll show how to do this with a quick exploration of a simple tabular dataset from the UCI repository. This dataset consists of physicochemical data on around 6500 samples of different wines, together with quality ratings and a tag for whether the wine is red or white.