Mapping Concept Evolution in Qwen3

David Fooshee (PhD), John Carlsson (PhD), Gunnar Carlsson (PhD)

We often describe Large Language Models (LLMs) as "black boxes." We observe the input and the output, but the internal machinery – the billions of calculations occurring in between – remains largely opaque. We can see that the model understands concepts, but we rarely discern how it constructs them. Understanding the “how” is vital: it gives us better information about how to control LLMs and how to diagnose possible malfunctions, such as the introduction of unacceptable biases or the production of undesirable language or modes of communication. This kind of control will also make adapting LLM technology to specific application domains, such as financial or legal documents, simpler and more direct.

The last couple of years have seen a great deal of work in this direction, under the heading of mechanistic interpretability. One particularly interesting contribution is a paper by researchers at Anthropic. In this paper, the authors study circuit tracing: the identification of graphs of features in which features at one level are shown to affect features at the next level. An important contribution of this paper is its recognition of the role of “supernodes” in the analysis of feature spaces constructed from LLMs. The idea is that it is often more informative to study coherent collections of features than individual features.

At BluelightAI, we have developed graphical models that allow us to identify meaningful groups of features more simply. These graphical models are a step in the direction of “automated concept discovery”: the groups of features they surface often correspond to things we would recognize as concepts. In this post, we identify semantic lineages within a specific set of features (cross-layer transcoder features) for two particular models in the Qwen3 family. A semantic lineage is obtained by selecting a layer, finding conceptually coherent groups (or supernodes) among the features of that layer, and then identifying features in earlier layers that strongly affect the chosen supernode.

At BluelightAI, we treat interpretability as a rigorous discipline grounded in mathematical structure. To that end, we are proud to release the first Cross-Layer Transcoders (CLTs) for the Qwen3 family of models (Qwen3-0.6B and Qwen3-1.7B).

Using Cobalt, our proprietary software for Topological Data Analysis (TDA), we have mapped the internal representations of these models. By constructing multiresolution topological graphs from over 573,000 discovered features, Cobalt allows us to observe not just static clusters, but the semantic evolution of ideas, demonstrating how a model refines raw, abstract inputs into specific, high-level concepts.

Methodology

To identify the semantic lineages described below, we applied the following process in our CLT Feature Explorer for Qwen3-0.6B-Base:

Target Identification: We explored feature coactivation graphs to identify clusters of interesting features within a single layer which “coactivate”, i.e. which tend to activate together. The selected cluster, typically comprising 2 to 5 features, served as our target.

Vector Computation: We computed the mean encoder vector for the target cluster to establish a centroid for analysis.

Backwards Search: We scanned all layers preceding the target layer to identify features with the highest positive virtual weights influencing the target cluster.

Signal Filtering: To ensure relevance and reduce noise, we focused on more frequently activating features, specifically those with an activation frequency greater than 1 in 10,000 tokens.
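The four steps above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the implementation used by Cobalt or the CLT Feature Explorer. The function name `trace_upstream_features`, the array layouts, and the `top_k` parameter are hypothetical, and the sketch assumes that a virtual weight is the dot product between an earlier-layer feature's decoder vector (the one writing into the target layer) and the target cluster's mean encoder vector.

```python
import numpy as np


def trace_upstream_features(target_encoders, upstream_decoders,
                            upstream_freqs, min_freq=1e-4, top_k=5):
    """Rank earlier-layer features by their virtual weight on a target cluster.

    target_encoders:   (k, d_model) encoder vectors of the target cluster
    upstream_decoders: (n, d_model) decoder vectors of earlier-layer features,
                       taken for the target layer (assumed layout)
    upstream_freqs:    (n,) fraction of tokens on which each feature fires
    """
    # Vector computation: centroid of the target cluster's encoder vectors.
    centroid = target_encoders.mean(axis=0)

    # Backwards search: assumed virtual weight = decoder . centroid.
    virtual_weights = upstream_decoders @ centroid

    # Signal filtering: keep features firing on more than 1 in 10,000 tokens,
    # and keep only positive influences on the target.
    eligible = (upstream_freqs > min_freq) & (virtual_weights > 0)
    candidates = np.flatnonzero(eligible)

    # Sort the surviving candidates by descending virtual weight.
    order = candidates[np.argsort(virtual_weights[candidates])[::-1]]
    return [(int(i), float(virtual_weights[i])) for i in order[:top_k]]


if __name__ == "__main__":
    # Toy data: a 2-feature target cluster and 4 upstream features.
    encoders = np.array([[1.0, 0.0], [0.0, 1.0]])
    decoders = np.array([[2.0, 2.0], [4.0, 4.0], [-1.0, -1.0], [1.0, 0.0]])
    freqs = np.array([1e-3, 5e-5, 1e-2, 2e-4])
    print(trace_upstream_features(encoders, decoders, freqs))
```

In the toy data, the second upstream feature has the largest weight but is dropped by the frequency filter, and the third is dropped because its weight is negative. In a real cross-layer transcoder the search would be repeated for every layer preceding the target, using each layer's decoder into the target layer.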

This method can map concepts in any domain, including those central to current applications of AI, such as banking and financial services, health care, security, and customer intelligence. It also makes it possible to trace contributions from earlier layers to later layers.

Here are some of the structures Cobalt uncovered within Qwen3 using this method.

Case Study 1: From "Problem Severity" to "Software Exceptions"

One of the most distinct topological structures Cobalt discovered tracks the lineage of software errors. We began with a target cluster of three features at Layer 12 related to defining and importing Exceptions in software.

High-Activation Examples:

Tracing this concept backward through the network revealed a logical progression that begins not in computer science, with potential errors in software systems, but in the context of medical problems and their severity levels within biological systems.

Layer 9: Real-world Severity

Deep in the model, the features are non-technical. Cobalt identified activations related to severe vs. mild situations, predominantly in medical or physical contexts.

High-Activation Example: "In severe cases, surgery may be necessary."

High-Activation Example: "Mild preeclampsia occurs in 6% of pregnancies and severe preeclampsia..."

High-Activation Example: "some experience mild symptoms, others can have moderate to severe symptoms"

Layer 10: Validation and Checking

Moving upward, the concept becomes procedural. The activations shift toward validating data and checking results.

High-Activation Example: "...therefore validating our cell transition data..."

High-Activation Example: "...repeated at least 3 x to verify our outcomes."

Layer 11: The "Fix"

One layer prior to the target, the concept sharpens into problem-solving. Cobalt highlighted features revolving around "problems" that require "fixing" or "interception."

High-Activation Example: "...make it clear to your landlord that this is a serious problem and you want it fixed now."

High-Activation Example: "...objects intended for intercepting calls to instance methods."

Layer 12: The Software Exception

Finally, the model translates this accumulated context – severity, validation, and remediation – into the specific coding concept of an Exception.

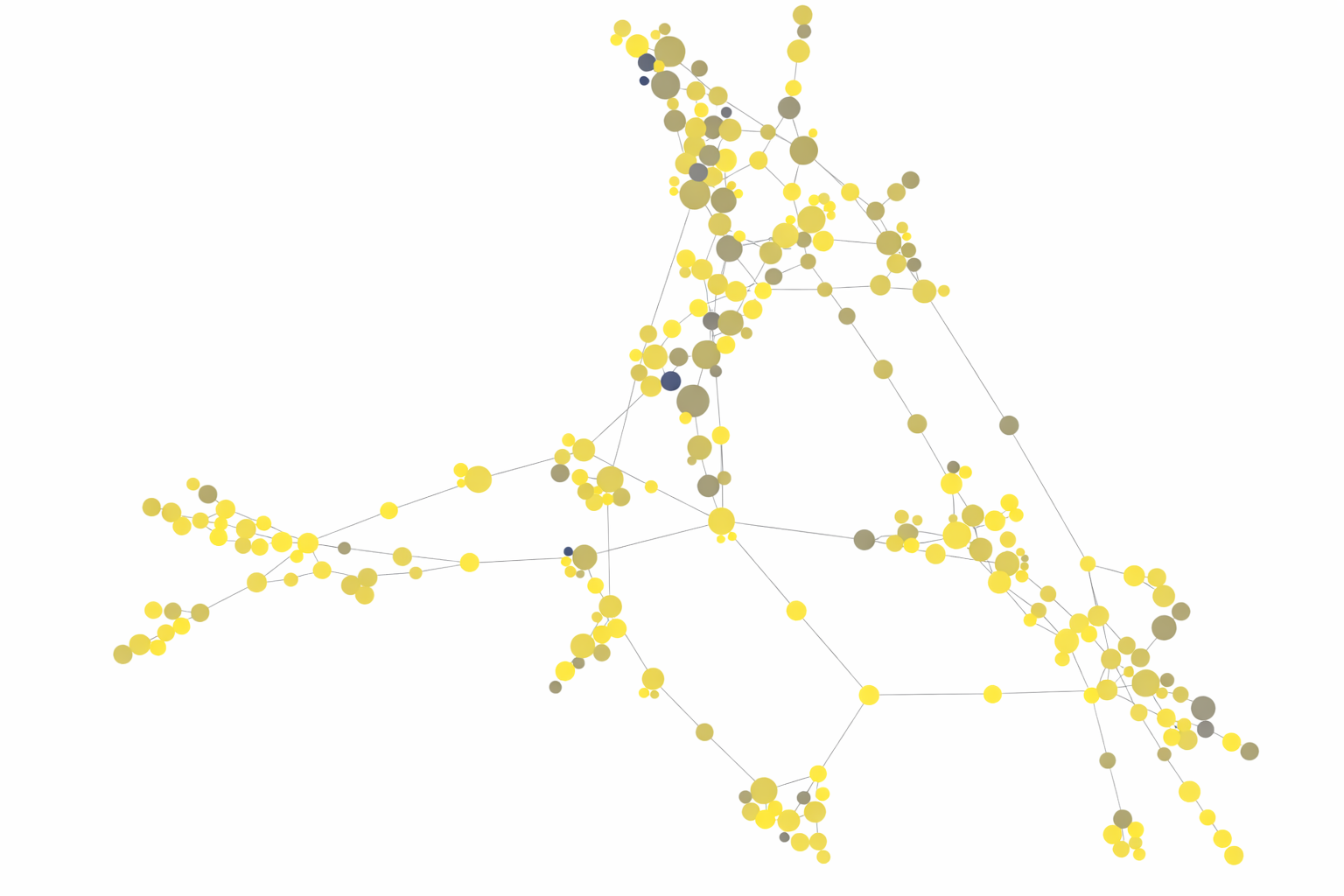

Figure 1. Example feature coactivation graph produced by Cobalt, used to identify target feature clusters. Semantically similar features tend to coactivate and are grouped into nodes, with semantically adjacent features appearing in neighboring nodes. The graph was constructed using a subset of BluelightAI’s Qwen3 CLT features. Node coloring indicates next-token activation frequency (log scale).

Case Study 2: The Geometry of "Progress"

In a second analysis, we examined abstract metaphors: "moving one step further" and "heading in the right direction." Cobalt revealed that Qwen3 constructs these metaphors using features originally dedicated to physical geometry.

"One Step Further"

We analyzed a target cluster of two features at Layer 23 that activate on the phrase "one step further." Cobalt tracked its antecedents to physical motion and numerical comparison:

Layer 17: Physical Movement and Algorithmic Progress

Activations focused on objects being repositioned in physical space.

High-Activation Example: "shifting"

High-Activation Example: "moving around"

Activations focused on a parameter update “step” in optimization.

High-Activation Example: “model parameters are then updated to improve …”

High-Activation Example: “parameters are updated using …”

Layer 20: Comparative Power

The concept evolves from movement to relative quality, describing entities that are "more than" or "stronger."

High-Activation Example: "rendering 9x faster"

High-Activation Example: "30x more powerful"

Layer 23: The Concept

The concept crystallizes into "One Step Further", an abstract procedural step, built upon the foundation of physical motion.

"Step in the Right Direction"

Similarly, for a target cluster of two features at Layer 23 that activate on "step in the right direction", Cobalt found a lineage steeped in spatial navigation:

Layer 19: Vision

A feature activated on text describing a "vision for where things are headed."

High-Activation Example: “Having shared visions and goals”

Layer 20: Directionality

Cobalt identified a feature firing on explicit directionality, such as palindromes.

High-Activation Example: "palindrome can be read in the same way from front and from back"

Layer 22: Pathfinding

Immediately preceding the target concept, a feature focused on navigating paths and optimizing routes.

High-Activation Example: "...length of the shortest path that visits pn points has length at least psqrt(n)*c."

This approach is a step toward greater automation in discovering functionally meaningful relationships in AI models. It permits more efficient discovery of the ways in which concepts develop within an LLM. In particular, it automatically highlights groups of features that combine to represent concepts, and it traces how those concepts develop through the language model. We believe these ideas and capabilities will become increasingly important as artificial intelligence is adapted to more and more specific situations, where more domain knowledge is applicable and where justifications for a model's behavior matter more.

Why Topological Data Analysis?

Traditional clustering often forces data into discrete groups, partitioning it in fixed, rigid ways that can obscure detail. The ability to work flexibly with groups allows for a more detailed understanding of what the data is saying.

Figure 1 shows a coactivation graph. Each node represents a collection of features that tend to activate together. Each node is colored by a measure of the extent to which firing on a particular token implies firing on the following token. We use these graphs to improve the search for groups that are more conceptual, in that they tend to be associated with a whole collection of tokens rather than a single one.
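To make the two quantities in this description concrete, here is a small NumPy sketch. It is illustrative only and does not reproduce how Cobalt builds its graphs. The function names are hypothetical, Jaccard similarity is an assumed stand-in for "tend to activate together", and the conditional frequency P(active at t+1 | active at t) is one plausible reading of the node-coloring measure. The sketch also treats the token stream as one contiguous sequence and ignores document boundaries.

```python
import numpy as np


def jaccard_coactivation(active):
    """Pairwise Jaccard similarity between features.

    active: (n_tokens, n_features) boolean matrix, True where a feature fires.
    Returns an (n_features, n_features) matrix with values in [0, 1].
    """
    a = active.astype(np.int64)
    both = a.T @ a                       # tokens on which both features fire
    counts = np.diag(both)               # tokens on which each feature fires
    either = counts[:, None] + counts[None, :] - both
    with np.errstate(divide="ignore", invalid="ignore"):
        # Features that never fire get similarity 0 instead of NaN.
        return np.where(either > 0, both / either, 0.0)


def next_token_persistence(active):
    """Per-feature estimate of P(fires on token t+1 | fires on token t)."""
    now, nxt = active[:-1], active[1:]
    fired = now.sum(axis=0)
    stayed = (now & nxt).sum(axis=0)
    out = np.zeros(active.shape[1])
    # Leave the value at 0 for features that never fire before the last token.
    return np.divide(stayed, fired, out=out, where=fired > 0)


if __name__ == "__main__":
    # Toy activations: 4 tokens, 3 features (the third never fires).
    toy = np.array([[1, 1, 0],
                    [1, 0, 0],
                    [0, 1, 0],
                    [1, 1, 0]], dtype=bool)
    print(jaccard_coactivation(toy))
    print(next_token_persistence(toy))
```

A feature with high persistence tends to stay active across a run of tokens, which matches the intuition that it tracks a concept spanning several tokens rather than a single one.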

Cobalt’s TDA approach respects the high-dimensional shape of the data. By building multiresolution graphs from the 573,000 features extracted by our CLTs, we are able to search them and find:

Transitional States: The intermediate zones where a feature functions as both "medical severity" and "software error."

Branching Paths: How a single concept like "movement" at Layer 17 diverges into "progress" at Layer 23 or perhaps "physical transportation" in adjacent clusters.

Automated Discovery: Cobalt ingests raw activation data and surfaces these topological structures automatically.

Conclusion

Mechanistic interpretability remains a complex scientific challenge. The opacity of the "Black Box" is often a function of insufficient tooling rather than inherent unknowability.

The release of our Qwen3 CLTs and the Cobalt Explorer is a contribution toward better instrumentation. These tools allow researchers to observe the structural transformation of concepts, from physical proxies to abstract reasoning, with greater precision. They make it easy to find and map internal concepts across many different models and use cases. We believe this level of granular analysis is an essential step toward rigorous model evaluation.

To explore these features yourself, visit the Qwen3 Explorer or read our full Technical Report.